Two streams on Safari

- Thread starter neogeo

You can play two streams on Mac OS Safari, where Adobe Flash Player is enabled and working.

You can't play two streams on iOS Safari because this browser works over WebSockets and is designed to play exactly one complete audio+video stream.

However, there is another possible option. It seems we can capture screen sharing together with the microphone in one WebRTC stream, but we have to check this option against our API.

If we have a working sample, we will share it.

So, two streams on Mac Safari can be played only through Flash, not through websocket, right?

Yes. On Mac Safari you need Adobe Flash Player to play two or more streams:

Example: https://wcs5-eu.flashphoner.com/demo2/2players

An SWF object will be embedded into each of these div blocks for audio or video playback.

Signaling will still work over wss (websockets).

Please let us know when you have a working sample. This will have a great impact on our service.

Sure. I will inform you once we have any progress.

Hello,

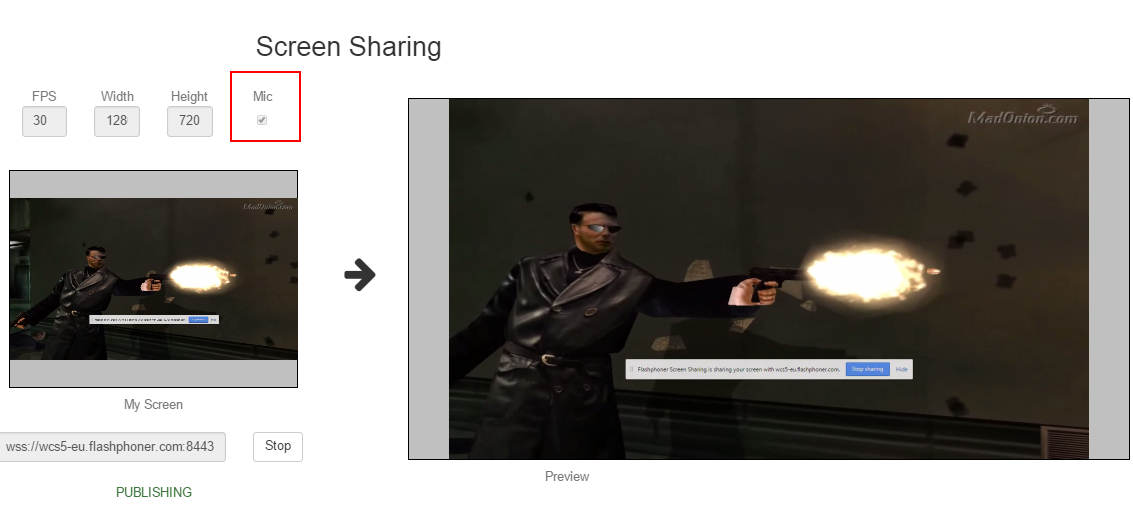

We have added an option to stream screen sharing + microphone.

So you will be able to publish screen video and audio in one WebRTC stream.

Please check our latest demo example:

https://wcs5-eu.flashphoner.com/demo2/screen-sharing

As you can see, you can set the Mic checkbox to use microphone input.

You have to update to the latest available Web SDK build.

Changes:

https://github.com/flashphoner/flashphoner_client/commit/5cc58de6bd3a363201290fa5294155a2edd077fb

https://github.com/flashphoner/flashphoner_client/commit/c34606d85a7a928c2b9c90e2befe2e457a9b28cf

Hello,

In your sample at https://wcs5-eu.flashphoner.com/client2/examples/demo/streaming/player/player.html

you seem to always specify the playWidth and playHeight variables. Is this a prerequisite?

I tried removing those variables, and then it doesn't work on devices with the Safari browser.

When you set the resolution to 0x0, the player uses the source video resolution.

For example, if your publisher has resolution 800x600, the player will play this resolution without changes.

When you set a particular resolution, for example 640x480, the server will transcode and rescale the publisher video to the requested resolution, for example from 800x600 (publisher) to 640x480 (requested).

iOS Safari on mobile devices does not support high resolutions because they affect the performance of the mobile device.

That's why we set playWidth and playHeight to 640x480 if the iOS Safari browser is detected (that is, when the WSPlayer media provider is used because WebRTC or Flash are unavailable).
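To make the two modes concrete, here is a minimal sketch that models the behaviour described above as a pure function. The helper name `effectivePlayResolution` and the shape of its arguments are assumptions made for illustration and are not part of the Flashphoner Web SDK; only the playWidth/playHeight names come from this thread.

```javascript
// Hypothetical helper (not an SDK function): models which resolution the
// player ends up with, following the rules described in this post.
function effectivePlayResolution(publisher, requested) {
  // 0x0 means "use the source resolution unchanged".
  if (requested.playWidth === 0 && requested.playHeight === 0) {
    return { width: publisher.width, height: publisher.height };
  }
  // Any other value: the server rescales the stream to the requested size.
  return { width: requested.playWidth, height: requested.playHeight };
}

// Example: an 800x600 publisher played with and without a requested size.
var source = { width: 800, height: 600 };
var asPublished = effectivePlayResolution(source, { playWidth: 0, playHeight: 0 });
var rescaled = effectivePlayResolution(source, { playWidth: 640, playHeight: 480 });
```

Note that the second case ignores the source aspect ratio, which matters when the publisher is not 4:3.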

I understand, thank you for the quick response.

Let me describe my use case.

I have a screen-sharing broadcaster who streams a variable-size app view to iOS devices running Safari.

If I specify the resolution (through playWidth and playHeight), the video looks distorted because it doesn't match the source resolution/aspect ratio.

I guess I need a way to force a maxWidth or maxHeight on the player stream and let Flashphoner calculate the resulting video size, but unfortunately if I set only those (without playWidth and playHeight) the video doesn't play.

Do you have a solution to this problem?

It seems we have to preserve the aspect ratio when transcoding.

For example:

If the source WebRTC stream is 1280x720 (16:9) and the player requests 640x480, it should receive 640x360.

If the source WebRTC stream is 1024x768 (4:3) and the player requests 640x480, it should receive 640x480.

This correction is not currently implemented for streams under transcoding (WebRTC to iOS Safari).
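The expected results in the two examples above can be reproduced with a short calculation. The following is only a sketch of the arithmetic, with a hypothetical function name; it is not the server's transcoding code.

```javascript
// Hypothetical helper: scale the source to fit inside the requested box
// while keeping the source aspect ratio. It does not prevent upscaling
// when the requested box is larger than the source.
function fitPreservingRatio(srcWidth, srcHeight, reqWidth, reqHeight) {
  // The smaller of the two scale factors keeps both sides inside the box.
  var scale = Math.min(reqWidth / srcWidth, reqHeight / srcHeight);
  return {
    width: Math.round(srcWidth * scale),
    height: Math.round(srcHeight * scale)
  };
}

var wide = fitPreservingRatio(1280, 720, 640, 480);   // 16:9 source
var classic = fitPreservingRatio(1024, 768, 640, 480); // 4:3 source
```

With these inputs, `wide` comes out as 640x360 and `classic` as 640x480, matching the figures above.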

I have added this issue to our tracker. I will inform you once we have any updates.

For now, you can use REST methods to find out the aspect ratio of published streams.

https://flashphoner.com/docs/wcs5/w...s/index.html?direct_invoke_scheme_of_work.htm

As you can see, WCS invokes the publishStream REST method and notifies your web server about the stream resolution.

So you can find out the resolution / ratio of the published stream, but it may take additional development on your end.
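As a rough idea of that additional development, the sketch below derives an aspect ratio from an already parsed publishStream request body. It assumes the body is JSON with numeric width and height fields, as in the REST example later in this thread, and falls back to a constraints.video object when those fields are zero. The function name is hypothetical and the fallback is an assumption, not documented WCS behaviour.

```javascript
// Hypothetical helper for a web server that receives publishStream calls.
// `event` is the parsed JSON body of the request.
function aspectRatioFromPublishEvent(event) {
  // Try the top-level width/height first, then the publisher's video constraints.
  var candidates = [event, event.constraints && event.constraints.video];
  for (var i = 0; i < candidates.length; i++) {
    var c = candidates[i];
    // Skip missing entries and boolean constraints such as "video": true.
    if (c && typeof c === "object" && c.width > 0 && c.height > 0) {
      return c.width / c.height;
    }
  }
  return null; // resolution unknown
}

var fromConstraints = aspectRatioFromPublishEvent({
  width: 0,
  height: 0,
  constraints: { audio: true, video: { width: 1280, height: 720 } }
});
var unknown = aspectRatioFromPublishEvent({ width: 0, height: 0 });
```

Returning null rather than a default ratio leaves it to the caller to decide what to do when the resolution is not reported.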

I have already implemented the REST methods for authentication purposes, and I can see in the logs that the resolution is always "width":0,"height":0.

I sent you a sample of the request.

We have modified the video resize util.

As you can see from this commit:

https://github.com/flashphoner/flashphoner_client/commit/d62ed312929c205253c563db766212ad3e8b0ea4

We are able to get the actual aspect ratio from the stream.videoResolution() method:

Code:

var streamResolution = stream.videoResolution();
var ratio = streamResolution.width / streamResolution.height;

So if we know the actual ratio, we are able to resize the canvas element (in the case of iOS Safari) to fit this ratio:

Code:

newSize = downScaleToFitSize(width, height, parentSize.w, parentSize.h);

These changes are available in the Web SDK build:

https://flashphoner.com/downloads/b...95db66982e6cd467ed08d17d56a6570de75761.tar.gz

We have passed the constraints object to REST.

Web SDK commit:

https://github.com/flashphoner/flashphoner_client/commit/0054f0f7eeb60ae4d0bc3482e2fe81b8ed3d75be

Build:

https://flashphoner.com/downloads/b...54f0f7eeb60ae4d0bc3482e2fe81b8ed3d75be.tar.gz

REST Example:

Code:

URL: http://localhost:9091/EchoApp/publishStream

OBJECT:
{
  "nodeId" : "H4gfHeULtX6ddGGUWwZxhUNyqZHUFH8j@192.168.1.59",
  "appKey" : "defaultApp",
  "sessionId" : "/192.168.88.254:51094/192.168.88.59:8443",
  "mediaSessionId" : "c7c0ca00-3ba6-11e7-bae0-b926f25ab319",
  "name" : "4070",
  "published" : true,
  "hasVideo" : true,
  "hasAudio" : true,
  "status" : "PENDING",
  "record" : false,
  "width" : 0,
  "height" : 0,
  "bitrate" : 0,
  "quality" : 0,
  "mediaProvider" : "WebRTC",
  "constraints" : {
    "audio" : true,
    "video" : {
      "width" : 1280,
      "height" : 720
    }
  }
}

Hello and thank you for the quick addition.

I ran my tests and the JavaScript part (stream.videoResolution()) is working fine, but unfortunately the call to REST isn't.

The JavaScript part is what I really need, though, so I'm OK.

If you still need feedback on REST, please send me a new version and I'll test it.
