Issue

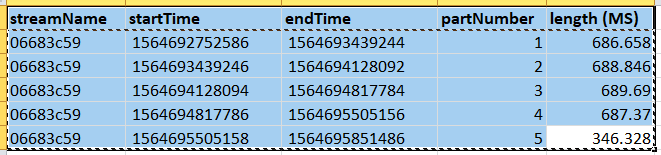

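Recording files don't split at the correct time: with record_rotation=300 set in flashphoner.properties, a new file is expected roughly every 300 seconds.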

Environment

Server: c5.xlarge

WCS: 5.2.267-018743542d11cabedb2fdc2dd34e1fc68e676158

Publisher: Demo - Several Stream Recording (15 streams)

flashphoner.properties

```properties
record_rotation=300
stream_record_policy_template={streamName}_{startTime}_{endTime}
```
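To see how far the actual split points are from the configured 300 seconds, the start and end times that the template above writes into each file name can be compared. The following is a minimal Python sketch, not a Flashphoner tool. It assumes that {startTime} and {endTime} are rendered as integer timestamps in milliseconds, that each file name ends in a single extension, and that the files sit in a local directory called records (the directory name, the helper segment_durations, and the 5-second tolerance are all hypothetical choices for illustration).

```python
import re
import sys
from pathlib import Path

# Hypothetical pattern for names produced by
# stream_record_policy_template={streamName}_{startTime}_{endTime}.
# Assumption: both timestamps are integer milliseconds. The stream name may
# contain underscores, so the two numeric fields are anchored at the end.
NAME_RE = re.compile(r"^(?P<stream>.+)_(?P<start>\d+)_(?P<end>\d+)\.\w+$")


def segment_durations(names, expected_s=300.0, tolerance_s=5.0):
    """Return (name, duration_s, ok) for every file name matching the template.

    ok is True when the segment length is within tolerance_s of expected_s.
    Names that do not match the template are skipped.
    """
    results = []
    for name in names:
        m = NAME_RE.match(name)
        if m is None:
            continue  # not produced by this template
        duration_s = (int(m["end"]) - int(m["start"])) / 1000.0
        ok = abs(duration_s - expected_s) <= tolerance_s
        results.append((name, duration_s, ok))
    return results


if __name__ == "__main__":
    # Directory to scan; "records" is only a placeholder default.
    records_dir = Path(sys.argv[1]) if len(sys.argv) > 1 else Path("records")
    names = sorted(p.name for p in records_dir.glob("*") if p.is_file())
    for name, dur, ok in segment_durations(names):
        print(f"{'OK ' if ok else 'OFF'} {dur:8.1f}s  {name}")
```

The last file of each stream is cut when publishing stops, so it will normally show up as OFF. Only the earlier segments are meaningful for judging the rotation interval.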

wcs-core.properties

```properties
### JVM OPTIONS ###
-Xmx6g
```

records

Logs

Emailed (report_2019-08-01-21-50-15.tar.gz)